Every now and then, I see a Reddit post from someone asking how to make or optimize a falling sand simulation on the GPU.

I used to read through GitHub issues like this or that where people deemed the whole problem impossible. Either the reactions are too hard (branching), the movement patterns become ugly (Margolus pattern), or data transfer between CPU and GPU becomes the bottleneck.

Even Noita’s creator said he didn’t choose the GPU for the simulation, being more comfortable with the CPU.

Making a falling sand game with commercial potential is hard enough to begin with. Noita took ~7 years to make. Adding GPU complexity on top of that is just not recommended. GitHub is full of falling sand projects people gave up on, projects which took years, most of them simply CPU simulated. Even I have several such failed projects.

I think, though, that the issue is not about performance at all. It’s not about GPU or CPU bottlenecks. It’s about persistence. And sticking to your choices. It’s about taking as long as it takes. In the end, the simulation is only a minuscule part of the whole engine and of the troubles you encounter along the way. I’m entirely comfortable with the idea of having to rewrite the simulation on the CPU, if necessary. I’ve already done it so many times. Or any other part of the engine. I’m even comfortable with throwing the whole simulation in the bin, if it serves the game.

I chose GPU simulation mostly out of curiosity and for the challenge. I think it’s beginning to pay off. Here are some nice perks a GPU-based falling sand simulation can give you.

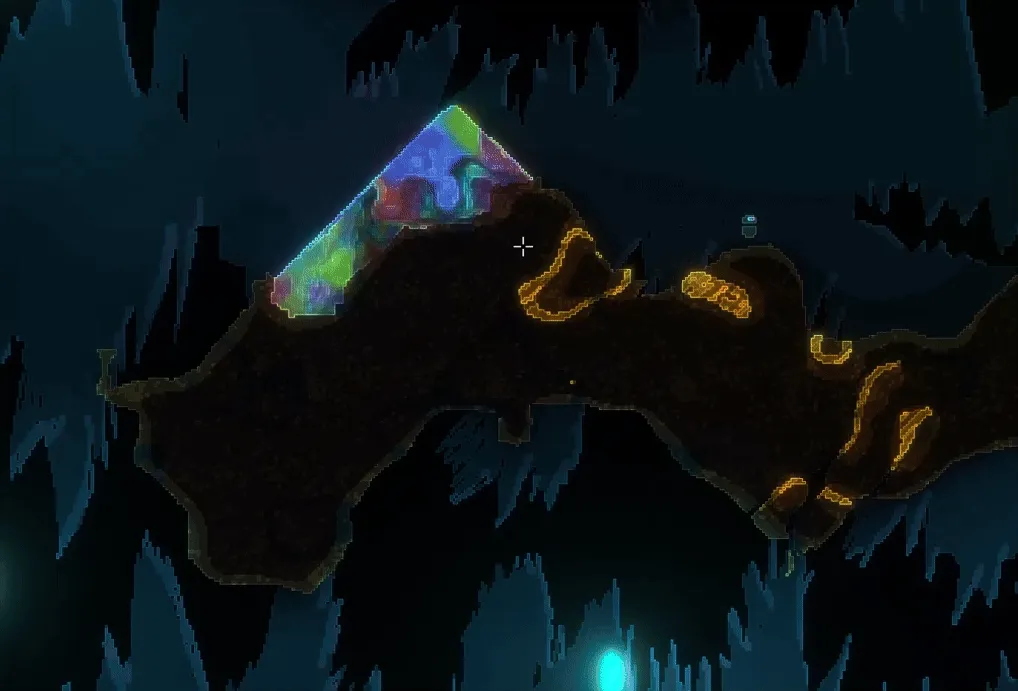

Everything is an image. You can transfer sprites and images into your simulation very easily. You’re not iterating over every pixel; instead you write it all at once.
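To make the "write it all at once" idea concrete, here is a minimal sketch in Python rather than shader code. It is not the engine’s actual implementation: `EMPTY`, `stamp_kernel` and `stamp` are hypothetical names, and the material ids are made up. The point is only that each cell decides its own value from the sprite, without looking at any other cell, which is what lets a GPU run all of them in one dispatch.

```python
EMPTY = 0  # hypothetical material id meaning "nothing here"


def stamp_kernel(world, sprite, ox, oy, x, y):
    """What a single GPU thread would do for world cell (x, y).

    The sprite's top-left corner is placed at (ox, oy). Empty sprite
    texels leave the world untouched, so sprites can have transparency.
    """
    sx, sy = x - ox, y - oy
    if 0 <= sy < len(sprite) and 0 <= sx < len(sprite[0]):
        texel = sprite[sy][sx]
        if texel != EMPTY:
            return texel
    return world[y][x]


def stamp(world, sprite, ox, oy):
    """CPU stand-in for one dispatch: every cell runs the same kernel.

    Each cell reads and writes only itself, so updating in place is safe.
    """
    for y in range(len(world)):
        for x in range(len(world[0])):
            world[y][x] = stamp_kernel(world, sprite, ox, oy, x, y)
    return world


world = [[EMPTY] * 4 for _ in range(3)]
sprite = [[1, EMPTY],
          [2, 3]]
stamp(world, sprite, 1, 1)
```

On the CPU the double loop is still a loop, of course. The sketch only shows that the per-cell logic has no dependency on its neighbours, so the order of the loop does not matter.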

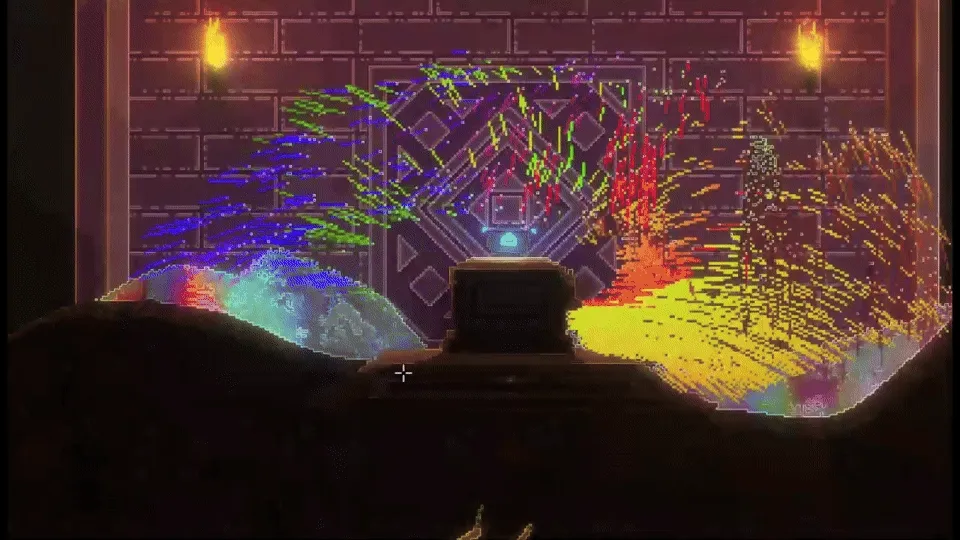

Explosions are free. Because we draw things in parallel in shaders, explosions are pretty much instant. I’m thinking of over-the-top chaos that leaves you wondering how they did that.
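As a rough illustration of why an explosion costs about the same regardless of its size, here is a sketch of the per-cell test, again in Python as a stand-in for a shader. The names `explode_kernel` and `explode`, and the choice to simply clear cells inside a circle, are assumptions for the example. A real explosion would likely spawn fire, debris or particles instead.

```python
EMPTY = 0  # hypothetical material id meaning "nothing here"


def explode_kernel(cell, x, y, cx, cy, radius):
    """What a single GPU thread would do for cell (x, y).

    Cells inside the blast circle are cleared; all others are unchanged.
    The squared distance avoids a square root per cell.
    """
    dx, dy = x - cx, y - cy
    if dx * dx + dy * dy <= radius * radius:
        return EMPTY
    return cell


def explode(world, cx, cy, radius):
    """CPU stand-in for one dispatch over the whole grid."""
    for y in range(len(world)):
        for x in range(len(world[0])):
            world[y][x] = explode_kernel(world[y][x], x, y, cx, cy, radius)
    return world


world = [[1] * 5 for _ in range(5)]
explode(world, 2, 2, 1)
```

Every cell runs the same tiny test independently, so on the GPU the radius only changes how many threads take the first branch.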

Particle interaction is immersive. Because particles are also on the GPU, we can transfer sand to particles, and particles to sand rather easily. This can make destruction feel like never before.

Debugging is much easier than you think. Just draw colors to a debug image from within your compute shader pipelines. This is one of the most useful things I added early on: being able to visualize whatever you need with colors or lines. In addition, I even added a number printer that converts integers to digits inside the shader. All of this has made RenderDoc almost useless for me. I am often not interested in a single frame, but in many. The better you can debug, the faster you get to where you are going. This, coupled with shader hot reload, is great.
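For the curious, a number printer like this can be sketched as a pure per-pixel function. This is not the actual shader from the engine, just one common way to do it, written in Python: the 3x5 font, the packed `GLYPHS` constants and the names `number_pixel` and `render` are all assumptions for the example. Each digit glyph is packed into a 15-bit integer, and every pixel works out which digit and which bit it falls on. It assumes non-negative integers.

```python
# Hypothetical 3x5 pixel font: each digit is 5 rows of 3 bits, top row first,
# packed into one 15-bit integer (in a shader these would be constants).
GLYPHS = tuple(int(bits, 2) for bits in (
    "111101101101111",  # 0
    "010110010010111",  # 1
    "111001111100111",  # 2
    "111001111001111",  # 3
    "101101111001001",  # 4
    "111100111001111",  # 5
    "111100111101111",  # 6
    "111001001001001",  # 7
    "111101111101111",  # 8
    "111101111001111",  # 9
))


def number_pixel(value, px, py, num_digits=4):
    """Return True if pixel (px, py), relative to the text origin, is lit.

    Digits are 3 pixels wide with 1 pixel of spacing, so each slot is 4 wide.
    """
    if px < 0 or py < 0 or py >= 5:
        return False
    slot, col = divmod(px, 4)
    if slot >= num_digits or col == 3:  # past the number, or in the spacing
        return False
    # Slot 0 holds the most significant digit.
    digit = (value // 10 ** (num_digits - 1 - slot)) % 10
    shift = 14 - (py * 3 + col)  # bit 14 is the top-left pixel of the glyph
    return (GLYPHS[digit] >> shift) & 1 == 1


def render(value, num_digits=4):
    """CPU stand-in for drawing into the debug image, as rows of text."""
    width = num_digits * 4 - 1
    return ["".join("#" if number_pixel(value, x, y, num_digits) else "."
                    for x in range(width)) for y in range(5)]


print("\n".join(render(1234)))
```

Because the function only depends on the value and the pixel position, it ports to a fragment or compute shader almost line by line, with integer division and modulo doing the digit extraction.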

Falling sand simulation on the GPU is what we’ve chosen, and we are acutely aware of some of the cons too: reading data back from the GPU, architecting how data is split between the CPU side and the GPU side, and deciding whether to double buffer or not (not). You have to think in pipelines and steps, rather than just writing the logic. One of the hardest parts is how you chunk your world, and how you architect the chunks themselves and their simulation. The falling sand methods themselves are easy to solve compared to the harder problems you will encounter if you make your own engine.
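Chunking has many possible designs, and this post does not describe ours in detail. As a small, generic illustration of the very first step, here is a sketch of mapping a world cell to a chunk and a local cell inside it. `CHUNK_SIZE = 64` and the name `to_chunk` are assumptions for the example only.

```python
CHUNK_SIZE = 64  # hypothetical chunk edge length, in cells


def to_chunk(wx, wy, size=CHUNK_SIZE):
    """Split world cell (wx, wy) into (chunk coords, local coords).

    Floor division and modulo keep negative world coordinates consistent:
    cell -1 is the last cell of chunk -1, not cell -1 of chunk 0.
    """
    cx, lx = divmod(wx, size)
    cy, ly = divmod(wy, size)
    return (cx, cy), (lx, ly)


print(to_chunk(64, 130))
print(to_chunk(-1, 0))
```

In languages where integer division truncates toward zero, the negative case needs explicit handling. The hard part is everything after this: which chunks live on the GPU, how their borders are simulated, and when they are streamed in and out.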

I would not recommend this path overall, for its difficulty. I would not recommend falling sand simulation games overall, for their difficulty.

But we’re here, halfway there. Don’t listen to others. Try it yourself.